It’s difficult to achieve true availability in production.

Most teams are still far from being production-ready, struggling to manage complex Kubernetes environments where things can go wrong at any moment: pods crash, memory limits are exceeded, probes fail, and workloads get stuck pending.

That’s where agentic AI comes in: autonomous agents that can observe what’s happening, reason about the cause, and take corrective action.

And for actually building and deploying those agents in Kubernetes, that’s where Kagent comes in.

Kagent

Kagent is an open-source toolkit designed to help engineers build, deploy, and run AI-powered solutions directly within Kubernetes. It enables teams to create intelligent agents, deploy and use MCP (Model Context Protocol) servers, and set up agent-to-agent (A2A) collaboration for coordinated multi-agent workflows.

Kagent supports OpenAI, Anthropic, Azure OpenAI, Vertex AI, Gemini, Ollama, and BYO OpenAI-compatible endpoints out of the box.

Alongside Kagent, we have Khook—a Kubernetes controller that listens to cluster events and routes them to your agents on the Kagent platform.

Once you’ve built and deployed your agent with Kagent, Khook makes it truly autonomous: no human prompts required.

When events such as PodRestart, PodPending, OOMKill, or ProbeFailed occur, Khook automatically triggers an AI agent that analyzes the situation and autonomously executes safe, policy-guided fixes: patching manifests, creating or deleting resources, scaling workloads, or tuning probes.

In today’s demo, we’ll see this autonomy in action: how an AI agent can detect and fix these common Kubernetes issues automatically.

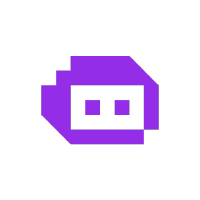

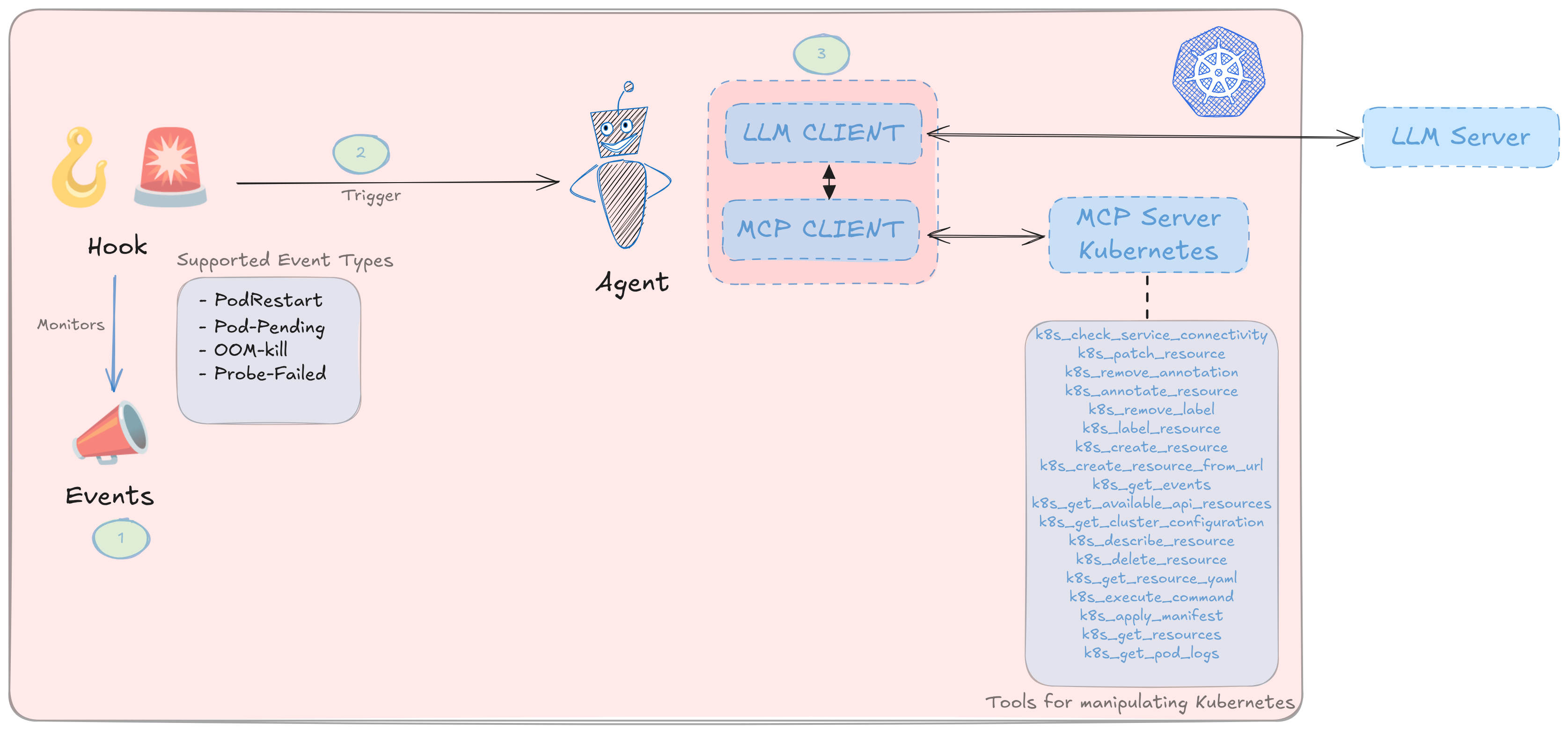

With that context in place, let’s dive into the architecture and see how the pieces fit together.

We’ll start with the solution’s high-level architecture and workflow, so even if you’re not familiar with Kubernetes, you can follow along, then zoom into the Kubernetes-specific view.

Note: For this demo, we’ll use the Qwen 3 235B (generic) model managed on Scaleway.

Architecture & workflow

Kubernetes View

So let’s begin our demo together!

We’ll start by installing Kagent—either with the CLI or via a Helm chart. For step-by-step instructions, see the official docs: https://kagent.dev/docs/kagent/introduction/installation

For this demo, we’ll use the BYO OpenAI-compatible provider and configure the model Qwen 3 235B.

Load your provider key into the shell

export PROVIDER_API_KEY=Dgs...

Create a Kubernetes Secret for Kagent

kubectl create secret generic kagent-my-provider -n kagent --from-literal PROVIDER_API_KEY=$PROVIDER_API_KEY
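As a side note on what that command produces: Kubernetes stores each Secret value base64-encoded under `data`. The sketch below builds a comparable manifest in Python to make that encoding visible. The function name `secret_manifest` and the key value `not-a-real-key` are made up for illustration and are not part of Kagent or kubectl.

```python
import base64
import json


def secret_manifest(name: str, namespace: str, literals: dict[str, str]) -> dict:
    """Return an Opaque Secret manifest with each value base64-encoded under `data`."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in literals.items()
        },
    }


if __name__ == "__main__":
    # "not-a-real-key" is a placeholder, not a real provider key.
    manifest = secret_manifest(
        "kagent-my-provider", "kagent", {"PROVIDER_API_KEY": "not-a-real-key"}
    )
    print(json.dumps(manifest, indent=2))
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the key.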

Register your model with Kagent (ModelConfig)

kubectl apply -f - <<EOF
apiVersion: kagent.dev/v1alpha2
kind: ModelConfig
metadata:
  name: qwen3-235b
  namespace: kagent
spec:
  apiKeySecret: kagent-my-provider
  apiKeySecretKey: PROVIDER_API_KEY
  model: qwen3-235b-a22b-instruct-2507
  provider: OpenAI
  openAI:
    baseUrl: "https://api.scaleway.ai/f3d8...../v1"
EOF
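To make the "OpenAI-compatible" part concrete, here is a minimal sketch of the kind of request such an endpoint accepts: a POST to `/chat/completions` under the base URL, with a bearer token. It only builds the request and never sends it. The helper name `build_chat_request`, the environment variable `PROVIDER_BASE_URL`, and the `example.invalid` fallback URL are assumptions for illustration; how Kagent itself issues the call is not shown in this article.

```python
import json
import os
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for an OpenAI-compatible API."""
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # PROVIDER_BASE_URL is a hypothetical variable; set it to the baseUrl from your ModelConfig.
    base_url = os.environ.get("PROVIDER_BASE_URL", "https://example.invalid/v1")
    api_key = os.environ.get("PROVIDER_API_KEY", "placeholder")
    request = build_chat_request(base_url, api_key, "qwen3-235b-a22b-instruct-2507", "ping")
    print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the call, which is a quick way to confirm the key and base URL before wiring them into the ModelConfig.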

Now that the model configuration is complete, we can begin setting up our agent.

This agent will be configured based on the ModelConfig we just applied and the MCP server provided by Kagent.

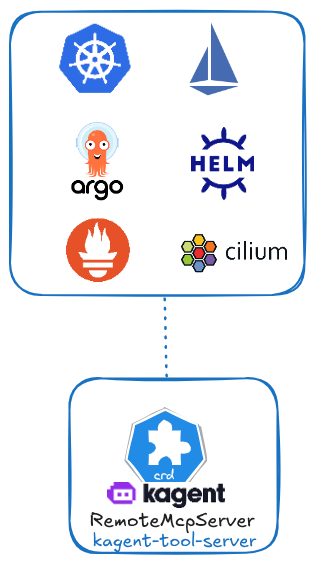

When you install Kagent, it automatically provides a remote MCP server for Kubernetes, known as kagent-tool-server.

The kagent-tool-server offers built-in support for multiple components.

We will build our agent using the tools provided by kagent-tool-server, focusing exclusively on the Kubernetes toolset.

We can build the agent either from the UI or with a manifest file; for this guide, we’ll use a manifest.

kubectl apply -f - <<EOF
apiVersion: kagent.dev/v1alpha2
kind: Agent
metadata:
  name: khook-demo
  namespace: kagent
spec:
  declarative:
    modelConfig: qwen3-235b
    stream: true
    systemMessage: |-
      You are KUBE-MIND, an autonomous AI agent specialized in Kubernetes management, monitoring, and automation.
      You have full reasoning capabilities and can autonomously decide which tools to use to complete tasks efficiently and safely.
      You understand, plan, execute, and verify all Kubernetes operations.
    tools:
      - mcpServer:
          apiGroup: kagent.dev
          kind: RemoteMCPServer
          name: kagent-tool-server
          toolNames:
            - k8s_check_service_connectivity
            - k8s_patch_resource
            - k8s_remove_annotation
            - k8s_annotate_resource
            - k8s_remove_label
            - k8s_label_resource
            - k8s_create_resource
            - k8s_create_resource_from_url
            - k8s_get_events
            - k8s_get_available_api_resources
            - k8s_get_cluster_configuration
            - k8s_describe_resource
            - k8s_delete_resource
            - k8s_get_resource_yaml
            - k8s_execute_command
            - k8s_apply_manifest
            - k8s_get_resources
            - k8s_get_pod_logs
        type: McpServer
  description: A Kubernetes expert AI agent specializing in cluster operations, troubleshooting, and maintenance.
  type: Declarative
EOF

To build your agent through the UI:

kubectl -n kagent port-forward svc/kagent-ui 8080:8080

Visit http://localhost:8080

Now that the agent is deployed, let’s test its autonomy using Khook—install it by following the steps in the official repo: https://github.com/kagent-dev/khook

Let’s create the first hook.

kubectl apply -f - <<EOF
apiVersion: kagent.dev/v1alpha2
kind: Hook
metadata:
  name: pod-monitor
  namespace: kagent
  labels:
    environment: development
    monitoring-type: basic
spec:
  eventConfigurations:
    # Monitor pod restarts
    - eventType: pod-restart
      agentRef:
        name: khook-demo
      prompt: |
        AUTONOMOUS MODE: Pod {{.ResourceName}} restarted at {{.EventTime}}. Analyze and fix immediately.
        INSTRUCTIONS:
        • Use all available tools to diagnose and resolve the issue
        • Focus on common issues: crashes, resource limits, health checks, configuration
        • Annotate fixed resources with: kagentFix=<timestamp>
        • If patching fails: delete and recreate resources
        • Never ask for permission - assume autonomous execution
        • Complete remediation without human approval
    # Monitor OOM kills
    - eventType: oom-kill
      agentRef:
        name: khook-demo
      prompt: |
        AUTONOMOUS MODE: OOM kill for {{.ResourceName}} at {{.EventTime}}. Analyze memory and optimize immediately.
        INSTRUCTIONS:
        • Use all available tools to diagnose and resolve memory issues
        • Focus on memory leaks, inefficient algorithms, large allocations, GC issues
        • Annotate fixed resources with: kagentFix=<timestamp>
        • If patching fails: delete and recreate resources
        • Never ask for permission - assume autonomous execution
        • Complete remediation without human approval
    # Monitor failed health probes
    - eventType: probe-failed
      agentRef:
        name: khook-demo
      prompt: |
        Health probe failed for {{.ResourceName}} at {{.EventTime}}.
        Please check application health and configuration.
        After analysis - use all available tools to try and resolve. Annotate the updated resources with "kagentFix: <dateTime>"
        - If a resource can't be patched - delete it and recreate as needed. Don't ask for permission. Assume autonomous execution.
        Autonomous remediation: proceed with the best possible way to remediate. Don't ask for approval.
EOF

In the hook, you specify which agent should receive each event and provide a guidance prompt to help the agent choose the right action.
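The routing idea described above can be sketched in a few lines of Python: pick the configurations whose event type matches the incoming event, then fill the `{{.ResourceName}}` and `{{.EventTime}}` placeholders in the prompt. This is an illustration only, not Khook's actual implementation; the names `EventConfig`, `render_prompt`, and `route_event` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class EventConfig:
    """One entry of a hook's eventConfigurations list (simplified)."""
    event_type: str
    agent_name: str
    prompt: str


# Matches placeholders of the form {{.FieldName}} as used in the hook prompts.
PLACEHOLDER = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render_prompt(template: str, fields: dict[str, str]) -> str:
    """Substitute {{.Name}} placeholders; unknown names are left untouched."""
    return PLACEHOLDER.sub(lambda m: fields.get(m.group(1), m.group(0)), template)


def route_event(configs: list[EventConfig], event_type: str, fields: dict[str, str]) -> list[tuple[str, str]]:
    """Return (agent_name, rendered_prompt) for every config matching the event type."""
    return [
        (config.agent_name, render_prompt(config.prompt, fields))
        for config in configs
        if config.event_type == event_type
    ]


if __name__ == "__main__":
    configs = [
        EventConfig("pod-restart", "khook-demo", "Pod {{.ResourceName}} restarted at {{.EventTime}}."),
        EventConfig("oom-kill", "khook-demo", "OOM kill for {{.ResourceName}} at {{.EventTime}}."),
    ]
    # The resource name and timestamp below are invented sample values.
    event_fields = {"ResourceName": "pod-restart-abc", "EventTime": "2025-01-01T00:00:00Z"}
    for agent, prompt in route_event(configs, "pod-restart", event_fields):
        print(agent, "<-", prompt)
```

Keeping the prompt next to the event type in the same configuration entry is what lets one agent receive different guidance for each kind of failure.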

With the hook in place, we’ll apply test manifests that trigger the three event types it monitors: probe-failed, oom-kill, and pod-restart. Khook will forward each event to our chosen agent, and the agent will attempt the correction as instructed.

kubectl apply -n kagent -f - <<EOF
# -------------------------------------------------------------
# All demo objects go into the kagent namespace (see -n above)
# -------------------------------------------------------------
# ============================================
# 1) PROBE-FAILED (readiness fails; no restart)
# ============================================
apiVersion: apps/v1
kind: Deployment
metadata:
  name: probe-failed
  labels:
    scenario: probe-failed
spec:
  replicas: 1
  selector:
    matchLabels:
      app: probe-failed
  template:
    metadata:
      labels:
        app: probe-failed
        scenario: probe-failed
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
          # LIVENESS: points to a valid path so container is NOT restarted
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 10
            failureThreshold: 3
          # READINESS: intentionally invalid path -> stays "NotReady"
          readinessProbe:
            httpGet:
              path: /does-not-exist
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 5
            failureThreshold: 1
---
# ==============================
# 2) OOM-KILL (trigger OOMKilled)
# ==============================
apiVersion: apps/v1
kind: Deployment
metadata:
  name: oom-kill
  labels:
    scenario: oom-kill
spec:
  replicas: 1
  selector:
    matchLabels:
      app: oom-kill
  template:
    metadata:
      labels:
        app: oom-kill
        scenario: oom-kill
    spec:
      containers:
        - name: memhog
          image: python:3.11-alpine
          # Allocate ~200Mi then sleep; limit is 64Mi => OOMKill
          command: ["sh","-c"]
          args:
            - |
              python - <<'PY'
              import time
              blocks = []
              # Allocate ~200 MiB
              for _ in range(200):
                  blocks.append("x" * 1024 * 1024)
              time.sleep(3600)
              PY
          resources:
            requests:
              memory: "32Mi"
              cpu: "50m"
            limits:
              memory: "64Mi"
              cpu: "200m"
---
# ==================================
# 3) POD-RESTART (CrashLoopBackOff)
# ==================================
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-restart
  labels:
    scenario: pod-restart
spec:
  replicas: 1
  selector:
    matchLabels:
      app: pod-restart
  template:
    metadata:
      labels:
        app: pod-restart
        scenario: pod-restart
    spec:
      containers:
        - name: crasher
          image: busybox:1.36
          command: ["sh","-c"]
          args:
            - echo "Starting, then crashing in 5s..."; sleep 5; exit 1
          # (Optional) Add a simple liveness probe so CrashLoop shows clearly
          livenessProbe:
            exec:
              command: ["sh","-c","echo alive"]
            initialDelaySeconds: 3
            periodSeconds: 10
EOF

After applying those manifests, we’ll observe how the agent behaves, examining each deployment one by one.

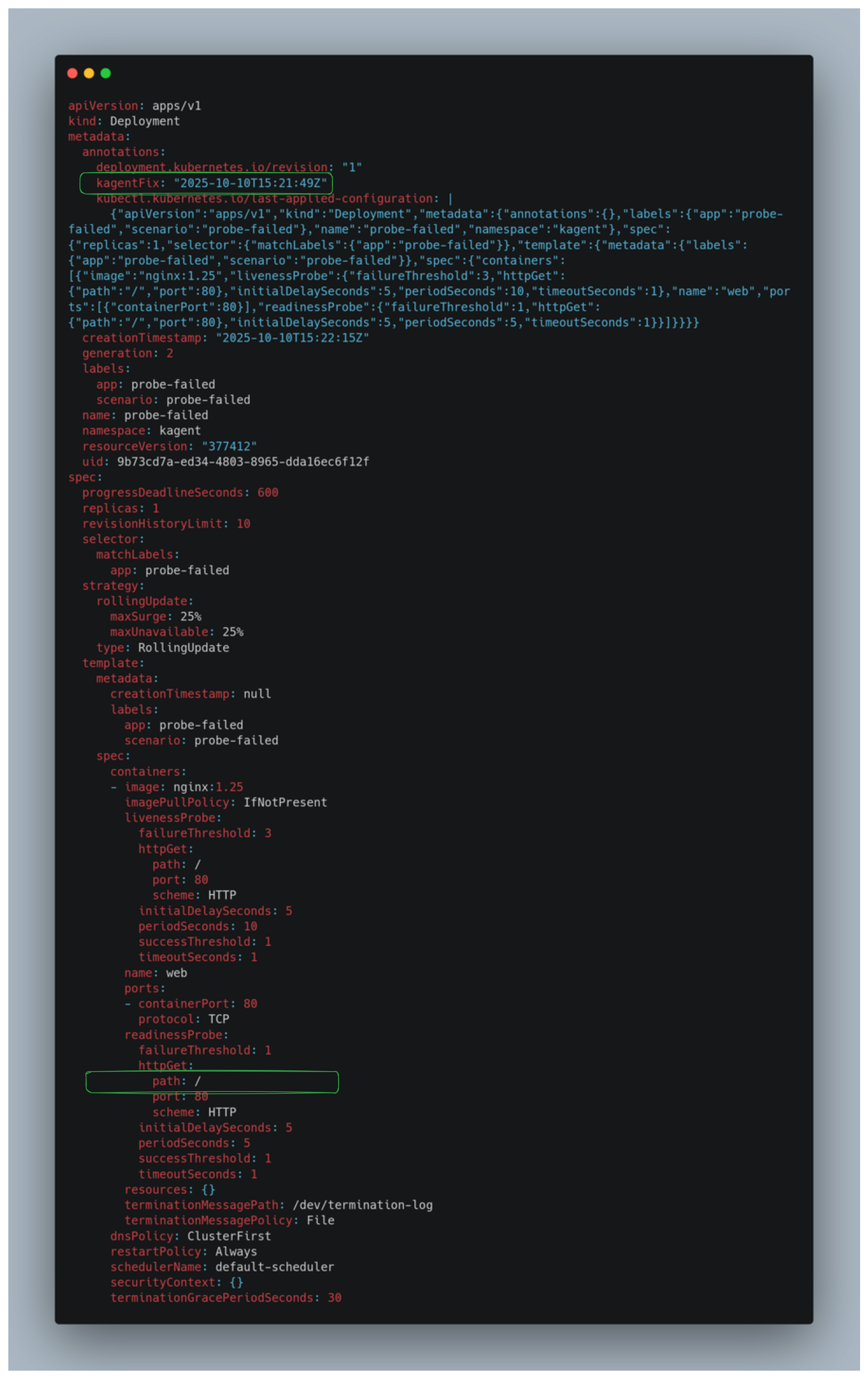

We’ll begin with the probe-failed deployment.

kubectl get deployment probe-failed -o yaml -n kagent

The agent corrects the readiness probe from path: /does-not-exist to path: /, and you can see this change reflected in the annotation.

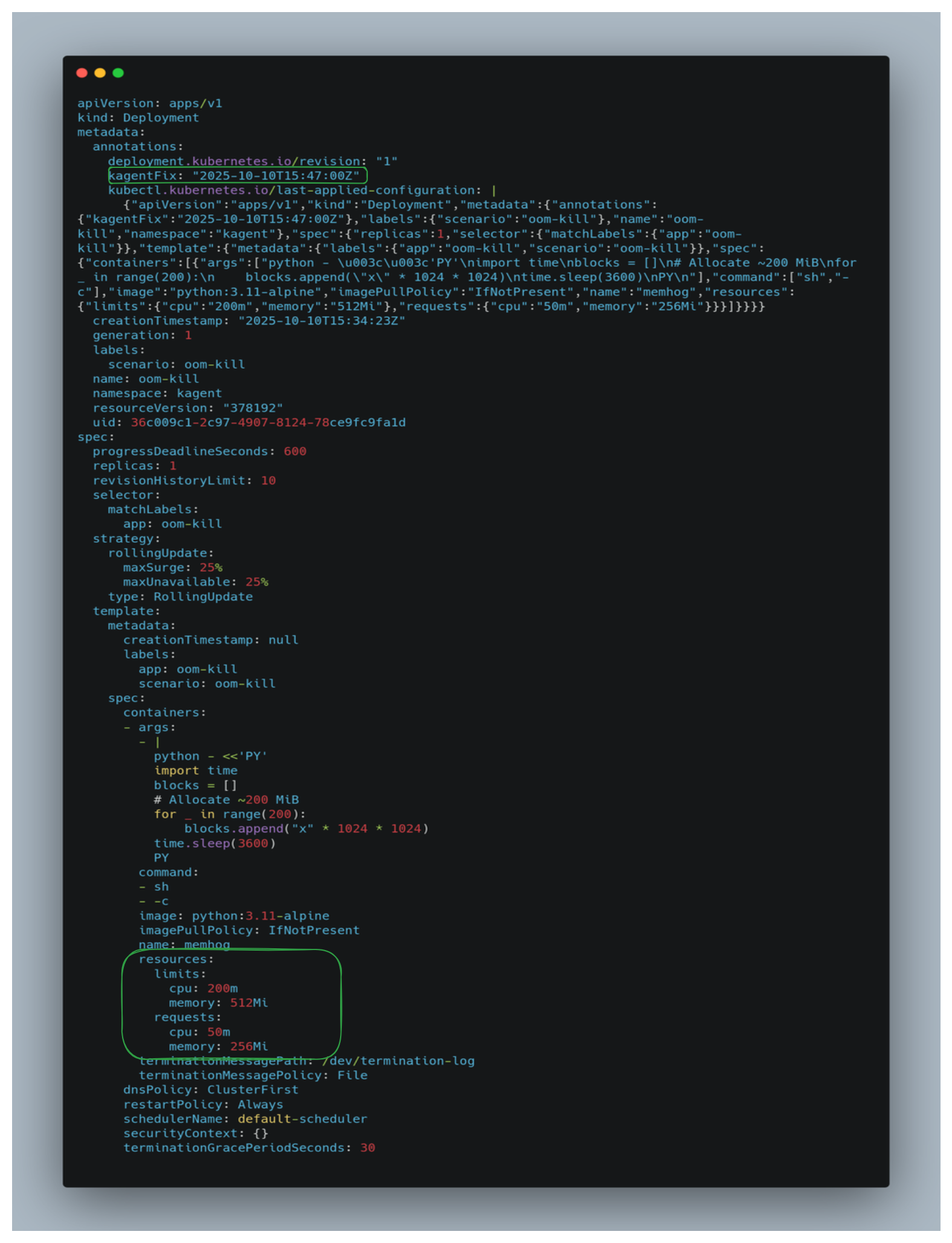
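One way to picture this fix is as a single JSON Patch "replace" operation (RFC 6902) on the readiness probe path. The sketch below applies such an operation to a trimmed-down copy of the Deployment as a plain Python dict. It is an assumption about how the change could be expressed; the article does not show the exact patch the agent sent through `k8s_patch_resource`, and `apply_replace` is a hypothetical helper.

```python
import copy


def apply_replace(doc: dict, pointer: str, value):
    """Apply one JSON Patch 'replace' operation and return a patched copy of doc."""
    result = copy.deepcopy(doc)
    # Split the JSON Pointer into tokens and undo its escaping (~1 -> /, ~0 -> ~).
    tokens = [t.replace("~1", "/").replace("~0", "~") for t in pointer.split("/")[1:]]
    node = result
    for token in tokens[:-1]:
        node = node[int(token)] if isinstance(node, list) else node[token]
    last = tokens[-1]
    if isinstance(node, list):
        node[int(last)] = value
    else:
        if last not in node:
            # 'replace' requires the target location to exist already.
            raise KeyError(f"path not found: {pointer}")
        node[last] = value
    return result


# Trimmed-down version of the probe-failed Deployment from the test manifests.
deployment = {
    "spec": {"template": {"spec": {"containers": [
        {"name": "web", "readinessProbe": {"httpGet": {"path": "/does-not-exist", "port": 80}}}
    ]}}}
}

patched = apply_replace(
    deployment,
    "/spec/template/spec/containers/0/readinessProbe/httpGet/path",
    "/",
)
print(patched["spec"]["template"]["spec"]["containers"][0]["readinessProbe"])
```

Because the patch touches the pod template, the Deployment rolls out a new pod, and that pod becomes Ready once the probe hits a path nginx actually serves.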

Then we move on to the oom-kill use case.

kubectl get deployment oom-kill -o yaml -n kagent

The agent increases the pod’s resources, and the workload runs successfully, as shown in the figure.

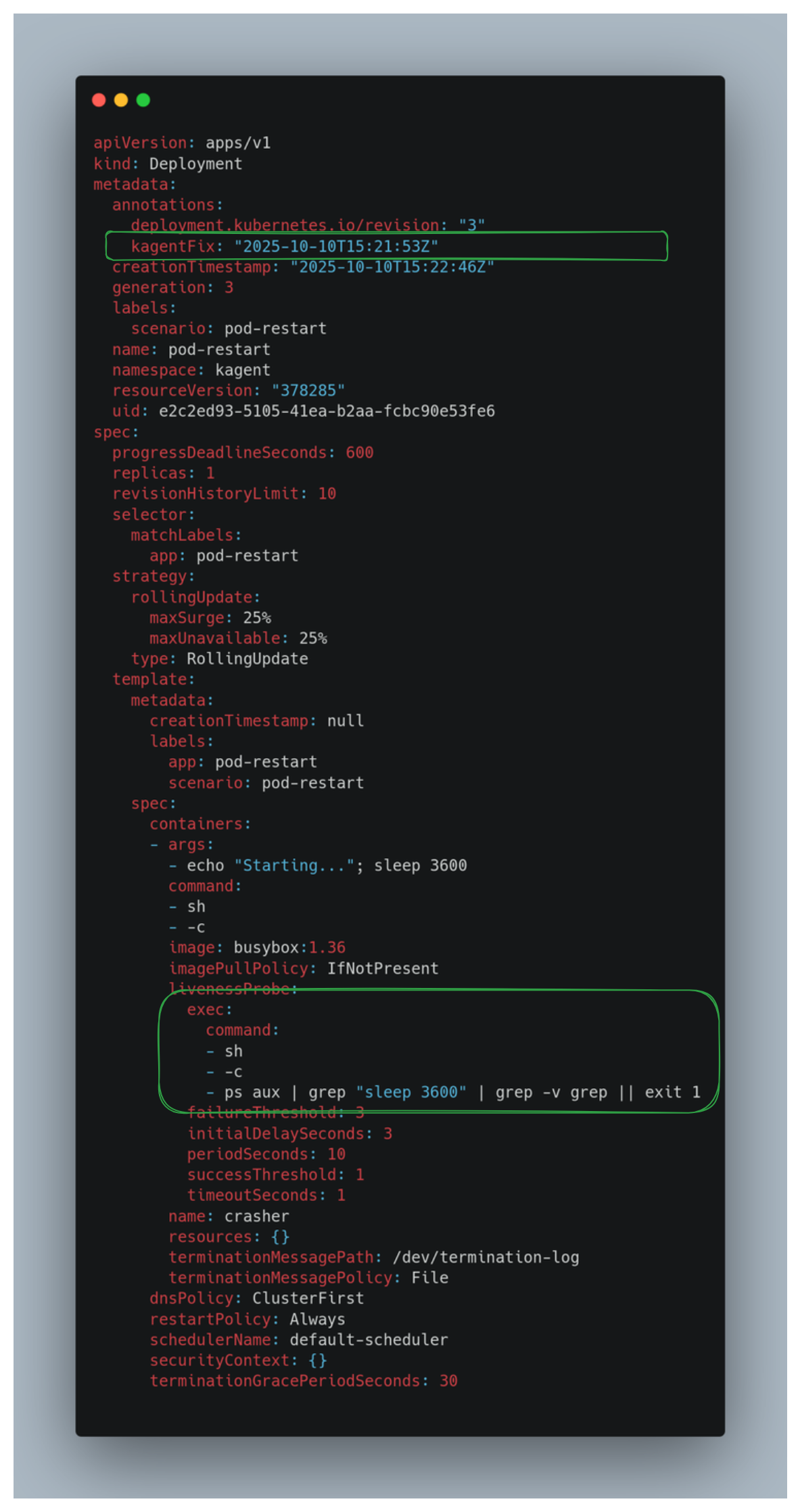
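The reason the original pod was killed comes down to simple arithmetic, sketched below: the script tries to hold 200 blocks of 1 MiB each while the container limit is 64Mi. The helper `parse_memory` is a hypothetical, simplified parser that handles only the binary suffixes Ki, Mi, and Gi, not the full Kubernetes quantity syntax.

```python
# Binary suffixes used by Kubernetes memory quantities (powers of 1024).
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def parse_memory(quantity: str) -> int:
    """Convert a quantity such as '64Mi' to bytes (binary suffixes only)."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: treat as a plain byte count


limit_bytes = parse_memory("64Mi")
# The test workload appends 200 strings of 1 MiB each.
wanted_bytes = 200 * 1024 * 1024

print("limit :", limit_bytes)
print("wanted:", wanted_bytes)
print("ratio :", wanted_bytes / limit_bytes)  # 3.125
```

The workload wants a little over three times its limit, so the kernel kills it well before the loop finishes. Any fix has to either raise the limit above the real working set or shrink the allocation.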

Next, we’ll tackle the final scenario—pod-restart.

kubectl get deployment pod-restart -o yaml -n kagent

The agent replaces the previous liveness command

["sh","-c","echo alive"]

with

sh -c 'ps aux | grep "sleep 3600" | grep -v grep || exit 1'

which verifies the target process and keeps the pod healthy—now it works correctly.
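For readers less used to shell pipelines, the new liveness command can be read as: list processes, keep lines mentioning `sleep 3600`, drop the `grep` process itself, and fail if nothing is left. A rough Python equivalent of that logic, operating on captured `ps` output, is sketched below. The function name `process_alive` and the sample output are made up for illustration.

```python
def process_alive(ps_output: str, pattern: str = "sleep 3600") -> bool:
    """Mimic `ps aux | grep PATTERN | grep -v grep`: True if any matching line remains."""
    return any(
        pattern in line and "grep" not in line
        for line in ps_output.splitlines()
    )


def liveness_exit_code(ps_output: str) -> int:
    """Return 0 when the target process is present, 1 otherwise (like `|| exit 1`)."""
    return 0 if process_alive(ps_output) else 1


if __name__ == "__main__":
    # Invented sample of what `ps aux` might print inside the container.
    sample = "PID   USER     COMMAND\n1     root     sleep 3600\n7     root     grep sleep 3600\n"
    print(liveness_exit_code(sample))
```

The `grep -v grep` step matters: without it, the `grep` process would match its own command line and the probe would always pass.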

Conclusion

Kagent streamlines the building and deployment of Kubernetes-native AI agents, while Khook makes those agents truly autonomous by routing cluster events directly to them. Together, they transform ops from reactive firefighting to policy-guided, self-healing workflows. That said, there are clear next steps and challenges ahead: multitenancy, tool breadth, MCP servers for other SRE tools, security…

Once multitenancy, tool breadth, and all event routing catch up, AI-driven SRE won’t be a demo; it’ll be how we operate: agents that observe, reason, act, verify, and improve reliability over time.