We always hear about controllers, operators, and reconcilers when we talk about Kubernetes, and they sound mysterious, almost magical. Everyone says “the controller manages this” or “the operator automates that”, but what exactly are these things? What’s inside a controller? How does it know what to do? And how can we build one ourselves?

In this blog, we’re going to unpack all of that, step by step. We’ll start by understanding what a controller really is inside Kubernetes, what concepts it’s built on, and why it’s so fundamental to how Kubernetes works. Then we’ll move from theory to practice and build our own controller using Kubebuilder, a framework that makes it much easier to write custom controllers and operators.

Understanding the Basics — Operator, Controller, and CRD

Kubernetes Operator

When people talk about a Kubernetes Operator, they mean a piece of software that automates tasks inside Kubernetes: things a human administrator or DevOps engineer would normally do by hand. In simple terms, an Operator is like a robot administrator 🤖 that knows how to manage a specific kind of application inside your cluster. It can deploy it, monitor it, back it up, and even fix it when something goes wrong.

But you might be wondering:

“Wait… Kubernetes already runs my apps with Deployments. So why do I need an Operator?”

That’s a great question, and it’s exactly where the difference lies.

For most simple applications, like web frontends or APIs, a Deployment (and maybe a Service) is all you need. Kubernetes already knows how to run your Pods, restart them if they fail, keep the requested number of replicas running, and scale them (for example with a HorizontalPodAutoscaler), all thanks to its built-in controllers.

However, not every application is that simple. Some workloads have state, data, or complex dependencies that need special logic — things that Kubernetes’ default controllers can’t manage on their own.

For example:

🗃️ Databases need controlled restarts, replication, and backups.

🔐 Certificates or Secrets must be renewed before they expire.

⚙️ Custom workflows may need to create or connect multiple resources in a specific order.

That’s where an Operator shines. It doesn’t just run your app — it understands it. It knows your application’s logic, lifecycle, and dependencies, and automates everything like an expert system administrator built directly into Kubernetes.

But that raises a few interesting questions:

How does this “robot” actually work?

What’s inside it that lets it understand and react to your app’s state?

How does it know when to act — and what to do?

To answer those, we need to look at the two core building blocks that make every Operator possible: the Controller and the Custom Resource Definition (CRD).

What Is a Controller?

A controller is the heart of every Operator. It’s the part that does the thinking and acting.

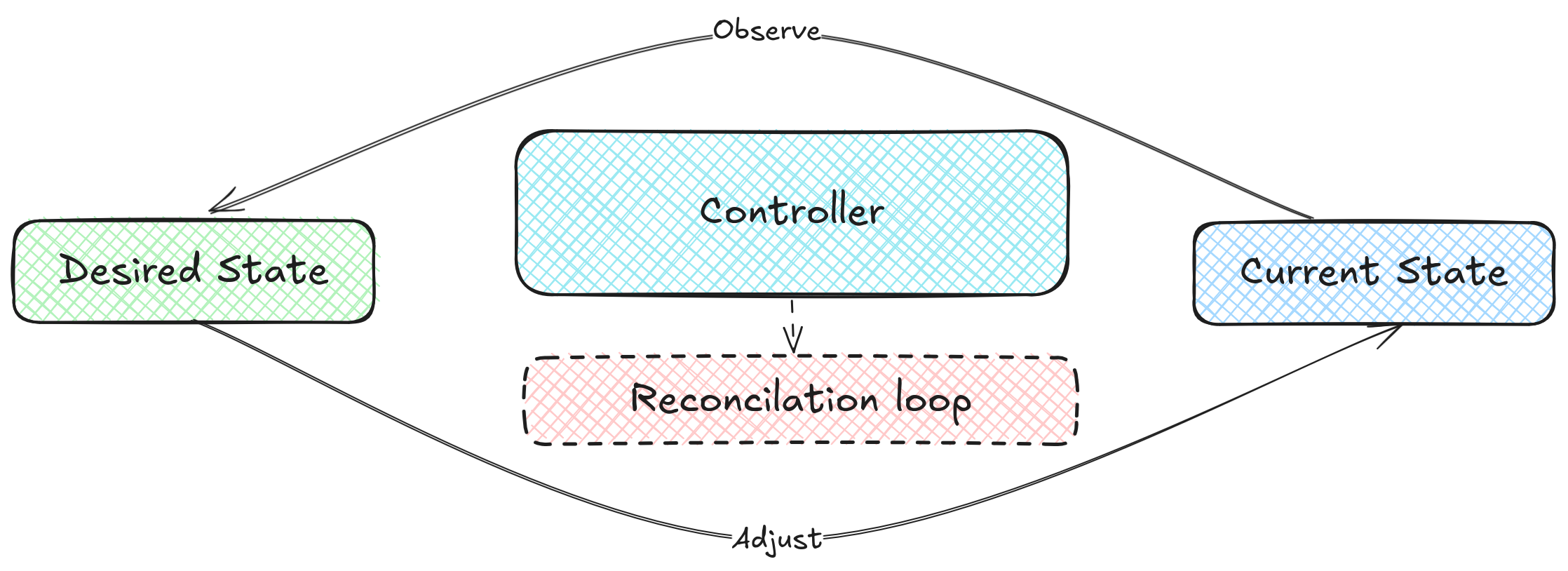

Its job is simple but powerful:

- Watch for changes in the cluster
- Compare what’s happening (actual state) with what you declared (desired state)
- Take action to make them match

This is called the reconciliation loop. Conceptually it runs continuously, like a heartbeat, keeping your cluster in sync; in practice it is triggered by change events and, optionally, by periodic re-checks.

💡 Think of a controller like a thermostat:

- You set the temperature you want (desired state).
- It measures the current temperature (actual state).
- If it’s too cold, it turns on the heater.

In Kubernetes terms: you declare that you want 3 Nginx Pods, the controller sees that only 2 are running, and it creates one more to reach 3.
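To make the analogy concrete, here is a minimal sketch of one reconcile step in plain Go, with no Kubernetes libraries involved. The types `desiredState` and `clusterState` and the function `reconcileReplicas` are hypothetical stand-ins for a spec, the observed cluster, and the controller's compare-and-act logic; the pod names are illustrative only.

```go
package main

import "fmt"

// desiredState is what the user declared (hypothetical stand-in for a spec).
type desiredState struct {
	Replicas int
}

// clusterState is what is actually running (hypothetical stand-in for observed Pods).
type clusterState struct {
	Pods []string
}

// reconcileReplicas compares desired and actual state and closes the gap by
// "creating" missing pods or "deleting" extra ones. It returns the actions taken.
func reconcileReplicas(want desiredState, have *clusterState) []string {
	var actions []string
	for len(have.Pods) < want.Replicas {
		name := fmt.Sprintf("nginx-%d", len(have.Pods)) // illustrative naming only
		have.Pods = append(have.Pods, name)
		actions = append(actions, "create "+name)
	}
	for len(have.Pods) > want.Replicas {
		last := have.Pods[len(have.Pods)-1]
		have.Pods = have.Pods[:len(have.Pods)-1]
		actions = append(actions, "delete "+last)
	}
	return actions
}

func main() {
	have := &clusterState{Pods: []string{"nginx-0", "nginx-1"}}
	fmt.Println(reconcileReplicas(desiredState{Replicas: 3}, have)) // [create nginx-2]
	fmt.Println(reconcileReplicas(desiredState{Replicas: 3}, have)) // []
}
```

Calling it a second time changes nothing. That idempotence is what lets a real controller run its loop as often as it likes without side effects piling up.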

What Is a CRD (Custom Resource Definition)?

A CRD is what makes Operators truly special. It lets you teach Kubernetes new kinds of objects — new words in its vocabulary. By default, Kubernetes understands things like:

- Pod

- Service

- Deployment

But with a CRD, you can define your own resource type. Once a CRD for Website is installed, you can create objects like this:

```yaml
apiVersion: web.example.com/v1
kind: Website
metadata:
  name: my-blog
spec:
  image: nginx
  replicas: 3
```

Now Kubernetes understands what a “Website” is, and you can manage it just like any built-in resource.

However, a CRD by itself is just a definition, not behavior. It describes what exists, but not what to do about it. That’s why you need the controller, to give life to your CRD.
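In Go, a CRD's schema is usually mirrored by plain structs with JSON tags, which is what Kubebuilder scaffolds later in this post. The sketch below is a standard-library-only illustration under stated assumptions: `Website`, `WebsiteSpec`, and `parseWebsite` are hypothetical names, and the manifest above is written as its JSON equivalent so that `encoding/json` can decode it. Notice that it only parses data; nothing here acts on it, which is exactly the controller's job.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// WebsiteSpec mirrors the spec block of the Website example (hypothetical type).
type WebsiteSpec struct {
	Image    string `json:"image"`
	Replicas int32  `json:"replicas"`
}

// Website mirrors the whole object. Real Kubebuilder types embed
// metav1.TypeMeta and metav1.ObjectMeta instead of these plain fields.
type Website struct {
	APIVersion string `json:"apiVersion"`
	Kind       string `json:"kind"`
	Metadata   struct {
		Name string `json:"name"`
	} `json:"metadata"`
	Spec WebsiteSpec `json:"spec"`
}

// parseWebsite decodes a manifest (in JSON form) into the typed struct.
func parseWebsite(raw []byte) (Website, error) {
	var w Website
	err := json.Unmarshal(raw, &w)
	return w, err
}

func main() {
	raw := []byte(`{"apiVersion":"web.example.com/v1","kind":"Website",
		"metadata":{"name":"my-blog"},"spec":{"image":"nginx","replicas":3}}`)
	w, err := parseWebsite(raw)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%s %q wants %d x %s\n", w.Kind, w.Metadata.Name, w.Spec.Replicas, w.Spec.Image)
	// Website "my-blog" wants 3 x nginx
}
```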

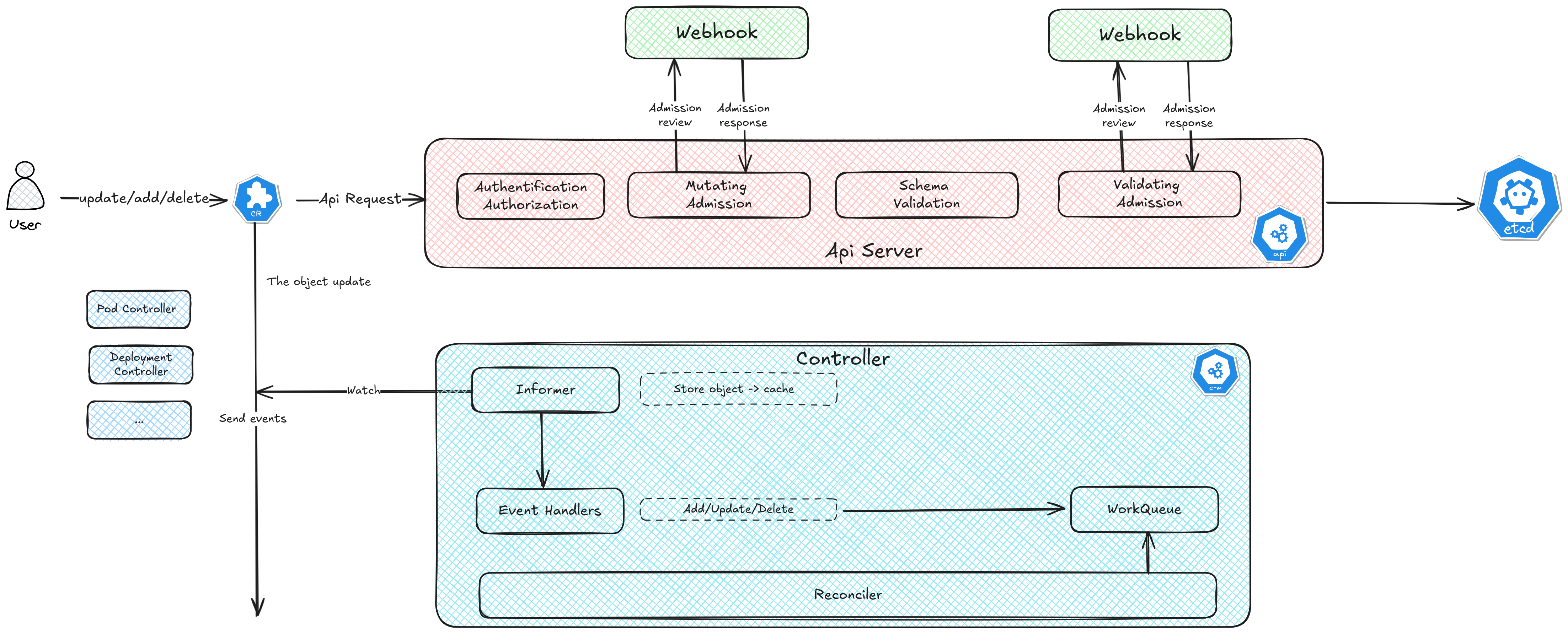

Now let’s look at the whole workflow.

If we look a bit deeper inside a controller, we’ll find the real engine that makes it all work: the Reconciler. Every controller in Kubernetes follows the same simple idea — it watches the state of the cluster and tries to make it match what the user wants. The Reconciler is the core function that does exactly that.

Whenever something changes (an object is created, updated, or deleted), the controller hears about it through an Informer. The Informer watches the API Server, keeps a local cache of the objects it sees, and puts the key of each changed object on a work queue; the Reconciler picks keys off that queue and starts working.
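That event path can be modelled with the standard library alone. This is a simplified sketch, not client-go: `event`, `informer`, `workQueue`, and `newInformer` are hypothetical types and helpers. The informer applies each event to its local cache and enqueues only the object's key; the queue drops keys that are already waiting, so a burst of updates to one object results in a single reconcile that reads the latest cached state.

```go
package main

import "fmt"

// event is a simplified watch event from the API server (hypothetical).
type event struct {
	Type string // "ADDED", "MODIFIED" or "DELETED"
	Key  string // namespace/name of the object
	Spec string // object payload, reduced to a string for the sketch
}

// workQueue holds keys to reconcile and ignores keys that are already waiting.
type workQueue struct {
	keys    []string
	pending map[string]bool
}

func (q *workQueue) Add(key string) {
	if q.pending[key] {
		return // already queued; one reconcile will see the latest state
	}
	q.pending[key] = true
	q.keys = append(q.keys, key)
}

func (q *workQueue) Pop() (string, bool) {
	if len(q.keys) == 0 {
		return "", false
	}
	key := q.keys[0]
	q.keys = q.keys[1:]
	delete(q.pending, key)
	return key, true
}

// informer keeps a local cache and feeds object keys into the queue.
type informer struct {
	cache map[string]string
	queue *workQueue
}

func (i *informer) Handle(e event) {
	if e.Type == "DELETED" {
		delete(i.cache, e.Key)
	} else {
		i.cache[e.Key] = e.Spec
	}
	i.queue.Add(e.Key)
}

func newInformer() *informer {
	return &informer{cache: map[string]string{}, queue: &workQueue{pending: map[string]bool{}}}
}

func main() {
	inf := newInformer()
	inf.Handle(event{"ADDED", "default/my-blog", "replicas=1"})
	inf.Handle(event{"MODIFIED", "default/my-blog", "replicas=3"})
	for key, ok := inf.queue.Pop(); ok; key, ok = inf.queue.Pop() {
		// The reconciler reads the cache, not the event payload.
		fmt.Println("reconcile", key, "->", inf.cache[key]) // reconcile default/my-blog -> replicas=3
	}
}
```

Reading current state instead of the event is the design choice that makes controllers robust: a missed or duplicated event does not matter, because the next reconcile always sees the latest picture.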

The Reconciler then compares the desired state (what you declared in your YAML) with the actual state (what’s really happening in the cluster). If they don’t match, the controller takes action — it creates, updates, or removes resources until everything is back in sync.

You can think of this as a constant conversation happening inside Kubernetes. The user sends a request (create, update, or delete). The API Server checks and stores it in etcd, then the controller, through the Reconciler, reacts to keep things aligned.

The Reconciler is often called the heartbeat of a controller. It runs every time something changes — or even periodically — to make sure the cluster’s actual state always matches the desired state. This continuous checking process is called the reconciliation loop. In code, it usually appears as a function named Reconcile() that executes whenever an event happens or after a set time.

When it comes to deleting resources, Kubernetes uses something called Finalizers to ensure cleanup happens properly. When an object is deleted, it doesn’t disappear right away. If it has a Finalizer, Kubernetes waits for your controller to do any necessary cleanup — like removing linked resources or external data — before actually deleting it.

For example, if you delete a Website resource, your controller might first delete its related Deployment and Service. Only after that will it remove the Finalizer, letting Kubernetes finish the deletion.

Kubebuilder and the Power of Scaffolding

Now that we understand how a controller works, the next question is:

how do we actually build one?

Kubernetes provides client libraries that let you interact with the cluster programmatically.

The main one is called client-go, which is basically the official Kubernetes SDK for Go.

With it, you can create, update, or delete any Kubernetes resource from your code.

But here’s the challenge — writing a controller from scratch with client-go is complex and repetitive.

You’d have to handle informers, caches, events, reconcilers, leader election, and much more.

It’s powerful, but not developer-friendly.

That’s why the Kubernetes community created Kubebuilder — a high-level framework that makes building controllers and operators much easier.

Kubebuilder uses controller-runtime and client-go under the hood, but gives you a clean project structure and automatically generates most of the boilerplate code for you.

When you create a project with Kubebuilder, it scaffolds everything:

- The project layout and Go modules

- The API and CRD definitions

- The controller logic skeleton

- The configuration and RBAC files

This means you can focus on the Reconcile logic — the brain of your controller — instead of wiring up the low-level Kubernetes details yourself.

Think of it like this:

client-go is the raw SDK, and Kubebuilder is the development framework that helps you build production-ready operators faster, cleaner, and with fewer mistakes.

In the next section, we’ll use Kubebuilder to scaffold our own controller step by step and see how all these pieces come together in real code.

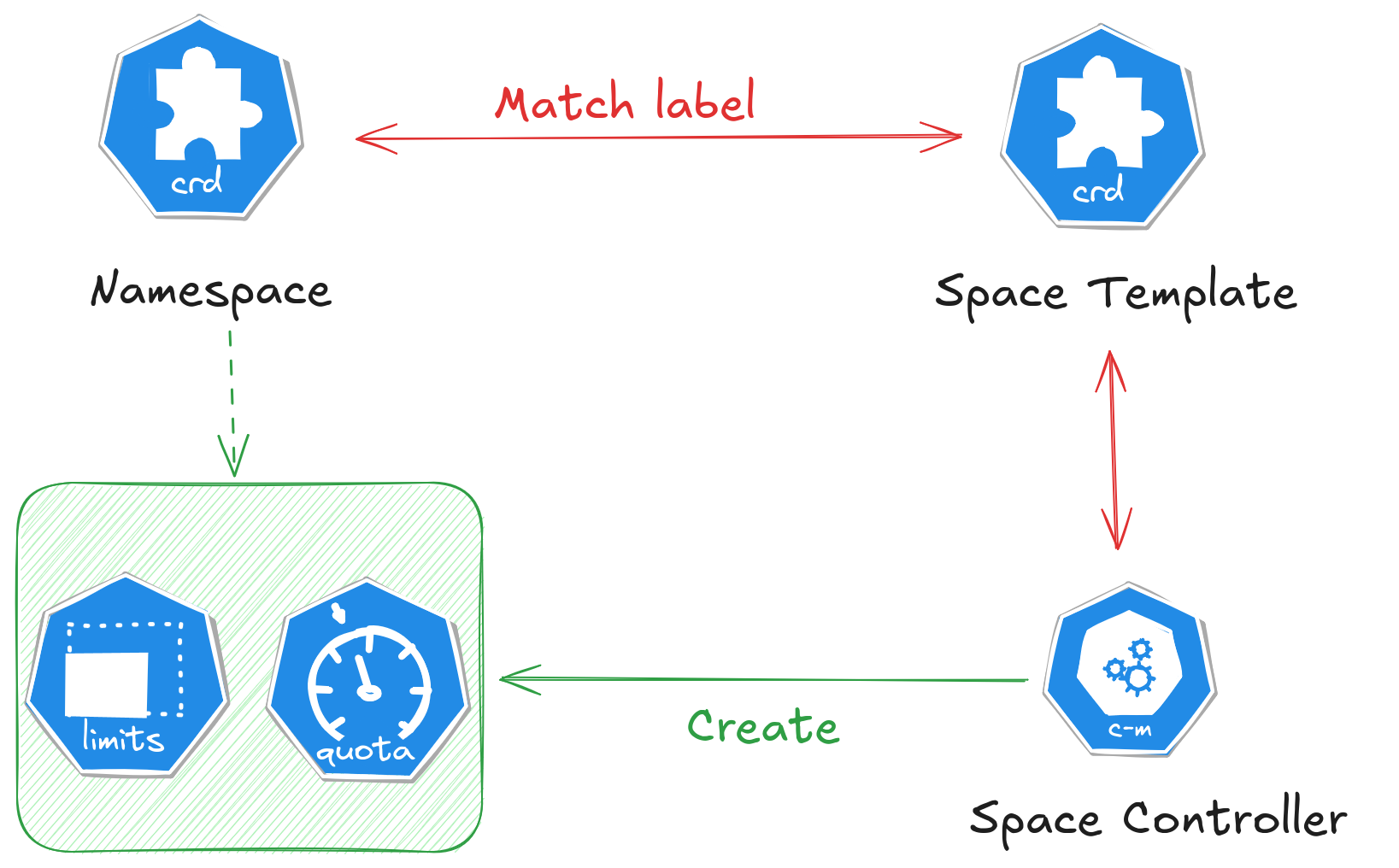

We’re going to build a practical Operator that applies workspace guardrails across namespaces. The idea is simple:

You create a SpaceTemplate (a custom resource defined by our CRD) that says: “For all namespaces matching this label selector, ensure they have these labels/annotations, a ResourceQuota, and a LimitRange.”

A controller (our Reconciler) watches SpaceTemplate objects and matching Namespaces, then:

- Adds/updates labels and annotations,
- Creates or updates a ResourceQuota and LimitRange per namespace,
- Tracks status (which namespaces were applied),
- Cleans up on deletion using finalizers (removing the objects it created and reverting metadata).

Think of it as a policy autopilot for namespaces.
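Stripped of API calls, the policy boils down to two decisions per namespace: does it match the selector, and which labels should be written? The sketch below models only `matchLabels` (the real controller converts the full selector, including `matchExpressions`, with `metav1.LabelSelectorAsSelector`). `matches` and `mergeLabels` are hypothetical helpers that follow the same rule the controller uses: missing keys are always added, existing keys only when overwrite is enabled. It assumes Go 1.21+ for the `maps` package.

```go
package main

import (
	"fmt"
	"maps"
)

// matches reports whether every matchLabels pair is present on the namespace.
// An empty selector matches everything, as with Kubernetes label selectors.
func matches(matchLabels, nsLabels map[string]string) bool {
	for k, v := range matchLabels {
		if got, ok := nsLabels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// mergeLabels returns a copy of current with the template labels applied:
// missing keys are always added, existing keys only when overwrite is true.
func mergeLabels(current, template map[string]string, overwrite bool) map[string]string {
	out := maps.Clone(current)
	if out == nil {
		out = map[string]string{}
	}
	for k, v := range template {
		if _, exists := out[k]; !exists || overwrite {
			out[k] = v
		}
	}
	return out
}

func main() {
	ns := map[string]string{"team": "backend", "platform.leminnov.com/owner": "someone"}
	tmpl := map[string]string{"platform.leminnov.com/owner": "platform-team"}
	if matches(map[string]string{"team": "backend"}, ns) {
		fmt.Println(mergeLabels(ns, tmpl, false)["platform.leminnov.com/owner"]) // someone
		fmt.Println(mergeLabels(ns, tmpl, true)["platform.leminnov.com/owner"])  // platform-team
	}
}
```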

Prerequisites

- Go ≥ 1.22, kubectl, Docker (or compatible), a running Kubernetes cluster (kind/minikube/dev).
- KUBECONFIG points to your cluster.

```bash
go version
kubectl version --client
docker version
```

Install Kubebuilder

```bash
curl -L -o kubebuilder "https://go.kubebuilder.io/dl/latest/$(go env GOOS)/$(go env GOARCH)"
chmod +x kubebuilder && sudo mv kubebuilder /usr/local/bin/
```

Bootstrap the project

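Kubebuilder derives the project name from the directory name and later uses it for the operator's namespace (namespace-quota-bootstrapper-system), so name the directory accordingly:

```bash
mkdir -p ~/projects/namespace-quota-bootstrapper
cd ~/projects/namespace-quota-bootstrapper
kubebuilder init \
  --domain leminnov.com \
  --repo github.com/leminnov/NamespaceQuotaBootstrapper
```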

Create the API (CRD) and Controller scaffold

```bash
kubebuilder create api \
  --group=platform \
  --version=v1alpha1 \
  --kind=SpaceTemplate
```

Answer the prompts:

- Create Resource? y
- Create Controller? y

Among other files, Kubebuilder generates:

- api/v1alpha1/spacetemplate_types.go
- internal/controller/spacetemplate_controller.go

Replace api/v1alpha1/spacetemplate_types.go

```go
package v1alpha1

import (
	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// EnforceSpec controls whether values that already exist are overwritten.
type EnforceSpec struct {
	// Overwrite namespace labels that already exist.
	OverwriteNamespaceLabels bool `json:"overwriteNamespaceLabels,omitempty"`
	// Overwrite namespace annotations that already exist.
	OverwriteNamespaceAnnotations bool `json:"overwriteNamespaceAnnotations,omitempty"`
	// Overwrite the spec of an existing ResourceQuota named st-<template name>.
	OverwriteResourceQuota bool `json:"overwriteResourceQuota,omitempty"`
	// Overwrite the spec of an existing LimitRange named st-<template name>.
	OverwriteLimitRange bool `json:"overwriteLimitRange,omitempty"`
}

// SpaceTemplateSpec defines the desired state of SpaceTemplate.
type SpaceTemplateSpec struct {
	// Selector matches the namespaces this template applies to (required).
	Selector *metav1.LabelSelector `json:"selector"`
	// Labels to add to every matching namespace.
	Labels map[string]string `json:"labels,omitempty"`
	// Annotations to add to every matching namespace.
	Annotations map[string]string `json:"annotations,omitempty"`
	// ResourceQuota to create in every matching namespace.
	ResourceQuota *corev1.ResourceQuotaSpec `json:"resourceQuota,omitempty"`
	// LimitRange to create in every matching namespace.
	LimitRange *corev1.LimitRangeSpec `json:"limitRange,omitempty"`
	// Enforce controls overwrite behaviour for values that already exist.
	Enforce EnforceSpec `json:"enforce,omitempty"`
}

// SpaceTemplateStatus defines the observed state of SpaceTemplate.
type SpaceTemplateStatus struct {
	// Kubernetes-standard Conditions field.
	// +optional
	// +listType=map
	// +listMapKey=type
	Conditions []metav1.Condition `json:"conditions,omitempty"`

	// Namespaces this template most recently applied to.
	// +optional
	// +listType=set
	AppliedNamespaces []string `json:"appliedNamespaces,omitempty"`

	// Last time we reconciled this template.
	// +optional
	LastReconcileTime *metav1.Time `json:"lastReconcileTime,omitempty"`
}

// +kubebuilder:object:root=true
// +kubebuilder:subresource:status
// +kubebuilder:resource:scope=Cluster,shortName=stpl

// SpaceTemplate is the Schema for the spacetemplates API
type SpaceTemplate struct {
	metav1.TypeMeta   `json:",inline"`
	metav1.ObjectMeta `json:"metadata,omitempty"`

	Spec   SpaceTemplateSpec   `json:"spec"`
	Status SpaceTemplateStatus `json:"status,omitempty"`
}

// +kubebuilder:object:root=true

// SpaceTemplateList contains a list of SpaceTemplate
type SpaceTemplateList struct {
	metav1.TypeMeta `json:",inline"`
	metav1.ListMeta `json:"metadata,omitempty"`
	Items           []SpaceTemplate `json:"items"`
}

func init() {
	SchemeBuilder.Register(&SpaceTemplate{}, &SpaceTemplateList{})
}
```

Replace internal/controller/spacetemplate_controller.go

```go
package controller

import (
	"context"
	"encoding/json"
	"fmt"
	"time"

	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/labels"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/apimachinery/pkg/types"
	"k8s.io/apimachinery/pkg/util/sets"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/builder"
	"sigs.k8s.io/controller-runtime/pkg/client"
	"sigs.k8s.io/controller-runtime/pkg/controller/controllerutil"
	"sigs.k8s.io/controller-runtime/pkg/event"
	"sigs.k8s.io/controller-runtime/pkg/handler"
	"sigs.k8s.io/controller-runtime/pkg/log"
	"sigs.k8s.io/controller-runtime/pkg/predicate"
	"sigs.k8s.io/controller-runtime/pkg/reconcile"

	platformv1alpha1 "github.com/leminnov/NamespaceQuotaBootstrapper/api/v1alpha1"
)

const (
	finalizerName = "spacetemplate.platform.leminnov.com/finalizer"

	// Namespace annotations recording which label/annotation keys a template
	// injected (JSON array of strings), so cleanup reverts only those keys.
	annOwnedLabelsKey      = "platform.leminnov.com/stpl.%s.owned-labels"
	annOwnedAnnotationsKey = "platform.leminnov.com/stpl.%s.owned-annotations"
)

// SpaceTemplateReconciler reconciles a SpaceTemplate object.
type SpaceTemplateReconciler struct {
	client.Client
	Scheme *runtime.Scheme
}

// +kubebuilder:rbac:groups=platform.leminnov.com,resources=spacetemplates,verbs=get;list;watch;create;update;patch;delete
// +kubebuilder:rbac:groups=platform.leminnov.com,resources=spacetemplates/status,verbs=get;update;patch
// +kubebuilder:rbac:groups=platform.leminnov.com,resources=spacetemplates/finalizers,verbs=update
// +kubebuilder:rbac:groups="",resources=namespaces,verbs=get;list;watch;create;update;patch;delete
// +kubebuilder:rbac:groups="",resources=resourcequotas,verbs=get;list;watch;create;update;patch;delete
// +kubebuilder:rbac:groups="",resources=limitranges,verbs=get;list;watch;create;update;patch;delete

// Reconcile applies one SpaceTemplate to every namespace its selector matches
// (labels/annotations, a ResourceQuota and a LimitRange), records the applied
// namespaces in status and, on deletion, cleans up before releasing the finalizer.
//
// For more details, check Reconcile and its Result here:
// - https://pkg.go.dev/sigs.k8s.io/controller-runtime@v0.22.1/pkg/reconcile
func (r *SpaceTemplateReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	l := log.FromContext(ctx)
	l.Info("reconcile started")

	st := &platformv1alpha1.SpaceTemplate{}
	if err := r.Get(ctx, req.NamespacedName, st); err != nil {
		// NotFound: the object is gone, nothing to do. Other errors are
		// returned so controller-runtime requeues with backoff.
		return ctrl.Result{}, client.IgnoreNotFound(err)
	}

	// --- Deletion flow: run cleanup, then remove the finalizer
	if !st.DeletionTimestamp.IsZero() {
		if controllerutil.ContainsFinalizer(st, finalizerName) {
			if err := r.cleanupAll(ctx, st); err != nil {
				l.Error(err, "cleanup failed, will retry")
				return ctrl.Result{RequeueAfter: 2 * time.Second}, nil
			}
			controllerutil.RemoveFinalizer(st, finalizerName)
			if err := r.Update(ctx, st); err != nil {
				return ctrl.Result{}, err
			}
		}
		return ctrl.Result{}, nil
	}

	// --- Ensure the finalizer is present before touching any namespace
	if !controllerutil.ContainsFinalizer(st, finalizerName) {
		controllerutil.AddFinalizer(st, finalizerName)
		if err := r.Update(ctx, st); err != nil {
			l.Error(err, "failed to add finalizer")
			// Returning the error is enough: controller-runtime requeues with backoff.
			return ctrl.Result{}, err
		}
		// The update triggers another event; the next reconcile continues from here.
		return ctrl.Result{}, nil
	}

	// --- Normal reconcile
	sel, err := metav1LabelSelectorAsSelector(st.Spec.Selector)
	if err != nil {
		// Retrying cannot fix an invalid selector, so do not requeue.
		l.Error(err, "invalid label selector")
		return ctrl.Result{}, nil
	}

	var nsList corev1.NamespaceList
	if err := r.List(ctx, &nsList, &client.ListOptions{LabelSelector: sel}); err != nil {
		return ctrl.Result{}, err
	}

	applied := sets.New[string]()
	for i := range nsList.Items {
		ns := &nsList.Items[i]
		// Objects cannot be created in a terminating namespace; skip it.
		if ns.Status.Phase == corev1.NamespaceTerminating {
			continue
		}
		if err := r.ensureNamespaceMeta(ctx, ns, st); err != nil {
			l.Error(err, "ensuring namespace meta", "namespace", ns.Name)
			return ctrl.Result{}, err
		}
		if st.Spec.ResourceQuota != nil {
			if err := r.ensureResourceQuota(ctx, ns.Name, st); err != nil {
				l.Error(err, "ensuring resourcequota", "namespace", ns.Name)
				return ctrl.Result{}, err
			}
		}
		if st.Spec.LimitRange != nil {
			if err := r.ensureLimitRange(ctx, ns.Name, st); err != nil {
				l.Error(err, "ensuring limitrange", "namespace", ns.Name)
				return ctrl.Result{}, err
			}
		}
		applied.Insert(ns.Name)
	}

	// --- Update status only if it changed
	newApplied := sets.List(applied) // sorted, so the comparison below is stable
	if !stringSlicesEqual(st.Status.AppliedNamespaces, newApplied) || st.Status.LastReconcileTime == nil {
		st.Status.AppliedNamespaces = newApplied
		now := metav1Now()
		st.Status.LastReconcileTime = &now
		if err := r.Status().Update(ctx, st); err != nil {
			// Usually a resourceVersion conflict; retry shortly with a fresh object.
			l.Info("status update failed; requeueing", "error", err.Error())
			return ctrl.Result{RequeueAfter: 2 * time.Second}, nil
		}
	}

	return ctrl.Result{}, nil
}

func stringSlicesEqual(a, b []string) bool {
	if len(a) != len(b) {
		return false
	}
	for i := range a {
		if a[i] != b[i] {
			return false
		}
	}
	return true
}

// ensureNamespaceMeta adds the template's labels and annotations to ns and
// records which keys it wrote, so revertNamespaceMeta can undo exactly those.
func (r *SpaceTemplateReconciler) ensureNamespaceMeta(ctx context.Context, ns *corev1.Namespace, st *platformv1alpha1.SpaceTemplate) error {
	before := ns.DeepCopy()
	if ns.Labels == nil {
		ns.Labels = map[string]string{}
	}
	if ns.Annotations == nil {
		ns.Annotations = map[string]string{}
	}

	labelsKey := fmt.Sprintf(annOwnedLabelsKey, st.Name)
	annsKey := fmt.Sprintf(annOwnedAnnotationsKey, st.Name)
	prevLabels, _ := loadOwnedKeys(ns.Annotations[labelsKey])
	prevAnns, _ := loadOwnedKeys(ns.Annotations[annsKey])
	ownedLabels := sets.New(prevLabels...)
	ownedAnns := sets.New(prevAnns...)

	// Labels: set when the key is absent, or when overwrite is enforced.
	for k, v := range st.Spec.Labels {
		if _, exists := ns.Labels[k]; !exists || st.Spec.Enforce.OverwriteNamespaceLabels {
			ns.Labels[k] = v
			ownedLabels.Insert(k)
		}
	}
	// Annotations: same rule.
	for k, v := range st.Spec.Annotations {
		if _, exists := ns.Annotations[k]; !exists || st.Spec.Enforce.OverwriteNamespaceAnnotations {
			ns.Annotations[k] = v
			ownedAnns.Insert(k)
		}
	}

	// Persist the owned keys for cleanup.
	if err := storeOwnedKeys(ns.Annotations, labelsKey, ownedLabels); err != nil {
		return err
	}
	if err := storeOwnedKeys(ns.Annotations, annsKey, ownedAnns); err != nil {
		return err
	}

	if mapsEqual(before.Labels, ns.Labels) && mapsEqual(before.Annotations, ns.Annotations) {
		return nil // nothing changed; skip the API call
	}
	return r.Patch(ctx, ns, client.MergeFrom(before))
}

func (r *SpaceTemplateReconciler) ensureResourceQuota(ctx context.Context, namespace string, st *platformv1alpha1.SpaceTemplate) error {
	rqName := fmt.Sprintf("st-%s", st.Name)
	var rq corev1.ResourceQuota
	err := r.Get(ctx, types.NamespacedName{Namespace: namespace, Name: rqName}, &rq)
	if errors.IsNotFound(err) {
		rq = corev1.ResourceQuota{
			ObjectMeta: metav1.ObjectMeta{Name: rqName, Namespace: namespace},
			// DeepCopy so the created object never shares maps with the template.
			Spec: *st.Spec.ResourceQuota.DeepCopy(),
		}
		// Non-controller owner reference (soft ownership) back to the template.
		if err := controllerutil.SetOwnerReference(st, &rq, r.Scheme); err != nil {
			return err
		}
		return r.Create(ctx, &rq)
	} else if err != nil {
		return err
	}

	// Patch the existing quota only if overwrite is enabled.
	if st.Spec.Enforce.OverwriteResourceQuota {
		before := rq.DeepCopy()
		rq.Spec = *st.Spec.ResourceQuota.DeepCopy()
		return r.Patch(ctx, &rq, client.MergeFrom(before))
	}
	return nil
}

func (r *SpaceTemplateReconciler) ensureLimitRange(ctx context.Context, namespace string, st *platformv1alpha1.SpaceTemplate) error {
	lrName := fmt.Sprintf("st-%s", st.Name)
	var lr corev1.LimitRange
	err := r.Get(ctx, types.NamespacedName{Namespace: namespace, Name: lrName}, &lr)
	if errors.IsNotFound(err) {
		lr = corev1.LimitRange{
			ObjectMeta: metav1.ObjectMeta{Name: lrName, Namespace: namespace},
			Spec:       *st.Spec.LimitRange.DeepCopy(),
		}
		if err := controllerutil.SetOwnerReference(st, &lr, r.Scheme); err != nil {
			return err
		}
		return r.Create(ctx, &lr)
	} else if err != nil {
		return err
	}

	if st.Spec.Enforce.OverwriteLimitRange {
		before := lr.DeepCopy()
		lr.Spec = *st.Spec.LimitRange.DeepCopy()
		return r.Patch(ctx, &lr, client.MergeFrom(before))
	}
	return nil
}

// SetupWithManager wires watches:
// - Reconcile when a SpaceTemplate changes
// - Reconcile ALL SpaceTemplates when a Namespace changes (simple & safe)
func (r *SpaceTemplateReconciler) SetupWithManager(mgr ctrl.Manager) error {
	// Map Namespace events -> SpaceTemplate reconciles (filter to matching templates if you want)
	mapNS := handler.EnqueueRequestsFromMapFunc(func(ctx context.Context, obj client.Object) []reconcile.Request {
		if _, ok := obj.(*corev1.Namespace); !ok {
			return nil
		}
		var list platformv1alpha1.SpaceTemplateList
		if err := r.List(ctx, &list); err != nil {
			log.FromContext(ctx).Error(err, "list SpaceTemplates failed")
			return nil
		}
		// Simple: enqueue all templates (fine while the count is small).
		reqs := make([]reconcile.Request, 0, len(list.Items))
		for i := range list.Items {
			reqs = append(reqs, reconcile.Request{
				NamespacedName: types.NamespacedName{Name: list.Items[i].Name},
			})
		}
		return reqs
	})

	// Only react when Namespace labels/annotations change (reduces noise).
	nsPred := predicate.Funcs{
		UpdateFunc: func(e event.UpdateEvent) bool {
			oldNS, ok1 := e.ObjectOld.(*corev1.Namespace)
			newNS, ok2 := e.ObjectNew.(*corev1.Namespace)
			if !ok1 || !ok2 {
				return false
			}
			return !mapsEqual(oldNS.Labels, newNS.Labels) ||
				!mapsEqual(oldNS.Annotations, newNS.Annotations)
		},
		CreateFunc:  func(e event.CreateEvent) bool { return true },
		DeleteFunc:  func(e event.DeleteEvent) bool { return true }, // set false if you don't need it
		GenericFunc: func(e event.GenericEvent) bool { return false },
	}

	return ctrl.NewControllerManagedBy(mgr).
		For(&platformv1alpha1.SpaceTemplate{}).
		Watches(&corev1.Namespace{}, mapNS, builder.WithPredicates(nsPred)).
		// Optionally: .WithOptions(controller.Options{MaxConcurrentReconciles: 4})
		Complete(r)
}

// --- Helpers

// metav1LabelSelectorAsSelector converts the API selector. A nil selector is
// rejected instead of silently matching every namespace in the cluster; an
// empty selector ({}) still matches all namespaces.
func metav1LabelSelectorAsSelector(sel *metav1.LabelSelector) (labels.Selector, error) {
	if sel == nil {
		return nil, fmt.Errorf("spec.selector is required")
	}
	return metav1.LabelSelectorAsSelector(sel)
}

func metav1Now() metav1.Time {
	return metav1.NewTime(time.Now().UTC())
}

func mapsEqual(a, b map[string]string) bool {
	if len(a) != len(b) {
		return false
	}
	for k, v := range a {
		if bv, ok := b[k]; !ok || bv != v {
			return false
		}
	}
	return true
}

// cleanupAll removes everything this template created: the ResourceQuota and
// LimitRange in each affected namespace, plus the labels/annotations it injected.
func (r *SpaceTemplateReconciler) cleanupAll(ctx context.Context, st *platformv1alpha1.SpaceTemplate) error {
	// Start from the namespaces recorded in status (labels may have changed
	// since the last reconcile), then add those that currently match.
	want := sets.New[string](st.Status.AppliedNamespaces...)
	if sel, err := metav1LabelSelectorAsSelector(st.Spec.Selector); err == nil {
		var nsList corev1.NamespaceList
		if err := r.List(ctx, &nsList, &client.ListOptions{LabelSelector: sel}); err != nil {
			return err
		}
		for i := range nsList.Items {
			want.Insert(nsList.Items[i].Name)
		}
	}

	// Per-namespace cleanup
	for ns := range want {
		if err := r.deleteRQ(ctx, ns, st.Name); err != nil && !errors.IsNotFound(err) {
			return fmt.Errorf("delete RQ in %s: %w", ns, err)
		}
		if err := r.deleteLR(ctx, ns, st.Name); err != nil && !errors.IsNotFound(err) {
			return fmt.Errorf("delete LR in %s: %w", ns, err)
		}
		if err := r.revertNamespaceMeta(ctx, ns, st.Name); err != nil {
			return fmt.Errorf("revert ns meta in %s: %w", ns, err)
		}
	}
	return nil
}

func (r *SpaceTemplateReconciler) deleteRQ(ctx context.Context, namespace, stName string) error {
	rqName := fmt.Sprintf("st-%s", stName)
	rq := &corev1.ResourceQuota{ObjectMeta: metav1.ObjectMeta{Name: rqName, Namespace: namespace}}
	return r.Delete(ctx, rq)
}

func (r *SpaceTemplateReconciler) deleteLR(ctx context.Context, namespace, stName string) error {
	lrName := fmt.Sprintf("st-%s", stName)
	lr := &corev1.LimitRange{ObjectMeta: metav1.ObjectMeta{Name: lrName, Namespace: namespace}}
	return r.Delete(ctx, lr)
}

// revertNamespaceMeta removes the label/annotation keys recorded as owned by
// the template, then the bookkeeping annotations themselves.
func (r *SpaceTemplateReconciler) revertNamespaceMeta(ctx context.Context, namespace, stName string) error {
	var ns corev1.Namespace
	if err := r.Get(ctx, types.NamespacedName{Name: namespace}, &ns); err != nil {
		if errors.IsNotFound(err) {
			return nil
		}
		return err
	}

	before := ns.DeepCopy()
	if ns.Annotations != nil {
		labelsKey := fmt.Sprintf(annOwnedLabelsKey, stName)
		if keys, ok := loadOwnedKeys(ns.Annotations[labelsKey]); ok {
			for _, k := range keys {
				delete(ns.Labels, k) // delete on a nil map is a no-op
			}
			delete(ns.Annotations, labelsKey)
		}

		annsKey := fmt.Sprintf(annOwnedAnnotationsKey, stName)
		if keys, ok := loadOwnedKeys(ns.Annotations[annsKey]); ok {
			for _, k := range keys {
				delete(ns.Annotations, k)
			}
			delete(ns.Annotations, annsKey)
		}
	}
	return r.Patch(ctx, &ns, client.MergeFrom(before))
}

// loadOwnedKeys decodes a JSON array of keys stored in an annotation.
func loadOwnedKeys(raw string) ([]string, bool) {
	if raw == "" {
		return nil, false
	}
	var arr []string
	if err := json.Unmarshal([]byte(raw), &arr); err != nil {
		return nil, false
	}
	return arr, true
}

// storeOwnedKeys writes keys as a sorted JSON array under annKey (no-op when empty).
func storeOwnedKeys(anns map[string]string, annKey string, keys sets.Set[string]) error {
	if keys.Len() == 0 {
		return nil
	}
	raw, err := json.Marshal(sets.List(keys))
	if err != nil {
		return err
	}
	anns[annKey] = string(raw)
	return nil
}
```
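Cleanup relies on the owned-labels bookkeeping annotation (the `annOwnedLabelsKey` format) that `revertNamespaceMeta` reads back. Here is a standard-library-only sketch of that round trip with the namespace reduced to two maps; `recordOwned` and `revertOwned` are hypothetical names, and only the "add if absent" path is modelled. It shows why the bookkeeping matters: only keys the template actually wrote are removed, while a label the user set independently survives.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

const ownedKeyFmt = "platform.leminnov.com/stpl.%s.owned-labels" // same format as annOwnedLabelsKey

// recordOwned adds template labels that are missing and records, as a sorted
// JSON array merged with any earlier record, exactly which keys were written.
func recordOwned(labels, annotations, template map[string]string, stName string) error {
	key := fmt.Sprintf(ownedKeyFmt, stName)
	owned := map[string]bool{}
	if raw, ok := annotations[key]; ok {
		var prev []string
		if err := json.Unmarshal([]byte(raw), &prev); err != nil {
			return err
		}
		for _, k := range prev {
			owned[k] = true
		}
	}
	for k, v := range template {
		if _, exists := labels[k]; !exists {
			labels[k] = v
			owned[k] = true
		}
	}
	if len(owned) == 0 {
		return nil
	}
	list := make([]string, 0, len(owned))
	for k := range owned {
		list = append(list, k)
	}
	sort.Strings(list)
	raw, err := json.Marshal(list)
	if err != nil {
		return err
	}
	annotations[key] = string(raw)
	return nil
}

// revertOwned removes only the recorded keys, then the bookkeeping annotation.
func revertOwned(labels, annotations map[string]string, stName string) error {
	key := fmt.Sprintf(ownedKeyFmt, stName)
	raw, ok := annotations[key]
	if !ok {
		return nil
	}
	var owned []string
	if err := json.Unmarshal([]byte(raw), &owned); err != nil {
		return err
	}
	for _, k := range owned {
		delete(labels, k)
	}
	delete(annotations, key)
	return nil
}

func main() {
	labels := map[string]string{"team": "backend", "env": "prod"}
	annotations := map[string]string{}
	tmpl := map[string]string{"platform.leminnov.com/owner": "platform-team", "env": "dev"}
	_ = recordOwned(labels, annotations, tmpl, "team-defaults")
	_ = revertOwned(labels, annotations, "team-defaults")
	fmt.Println(labels) // map[env:prod team:backend]
}
```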

Generate code, render manifests, then install CRDs

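```bash
make generate   # regenerates the DeepCopy methods (zz_generated.deepcopy.go)
make manifests  # renders CRD and RBAC YAML under config/
make install    # creates the CRDs in your cluster
```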

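Run locally (dev)

```bash
make run
```

This runs the controller on your machine against the cluster in your current kubeconfig. Stop it before deploying the in-cluster version, so two copies don't reconcile the same objects.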

Build and push the image

```bash
export IMG=<your-registry>/<project-name>:<tag>
make docker-build IMG=$IMG
make docker-push IMG=$IMG
```

Deploy the operator

```bash
make deploy IMG=$IMG
```

Check:

```bash
kubectl get crds | grep spacetemplates
kubectl -n namespace-quota-bootstrapper-system get deploy,po
```

Sample CR to test

```yaml
apiVersion: platform.leminnov.com/v1alpha1
kind: SpaceTemplate
metadata:
  name: team-defaults
spec:
  selector:
    matchLabels:
      team: backend
  labels:
    platform.leminnov.com/owner: "platform-team"
  annotations:
    platform.leminnov.com/cost-center: "cc-42"
  resourceQuota:
    hard:
      pods: "20"
      requests.cpu: "4"
      requests.memory: "8Gi"
      limits.cpu: "8"
      limits.memory: "16Gi"
  limitRange:
    limits:
      - type: Container
        defaultRequest:
          cpu: "100m"
          memory: "128Mi"
        default:
          cpu: "500m"
          memory: "512Mi"
  enforce:
    overwriteNamespaceLabels: true
    overwriteNamespaceAnnotations: true
    overwriteResourceQuota: true
    overwriteLimitRange: true
```

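Save the manifest above as config/samples/platform_v1alpha1_spacetemplate.yaml (Kubebuilder scaffolded this file; replace its contents), then apply it and label a test namespace:

```bash
kubectl apply -f config/samples/platform_v1alpha1_spacetemplate.yaml
kubectl create namespace my-team-ns
kubectl label ns my-team-ns team=backend --overwrite
kubectl -n my-team-ns get resourcequota,limitrange
```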